Generative AI is Costing Us Our Humanity

There’s an ad on the air right now for the new AI model from Meta (the parent company of Facebook). In the ad, a woman is tasked with hosting a book club at her house. She prepares for the book club (reading Herman Melville’s Moby Dick) by asking her Meta AI assistant for fun ways to decorate her house in a nautical theme and for whale-based puns for her appetizers. But then, at the last moment, when her guests have finally arrived, she ducks into the hall and asks the AI, “Hey, Meta, what are some discussion starters for Moby Dick?”

EXCUSE ME? Why on earth are you even hosting a book club if you have no interest in critically engaging with the book you’re reading? You’re asking the computer for discussion starters??

There is something doubly sinister about this ad, since Meta has recently been in court defending its use of a vast scheme of copyright infringement (to the tune of 7 million pirated books) to train the very AI that now recommends to the would-be book club host questions she can ask her guests about the book they’re all supposed to have read. Meta has argued that “none of Plaintiffs works has economic value, individually, as training data” (Vanity Fair, Apr. 15, 2025), and that its mass pirating of these 7 million books constituted “fair use” (Reuters, Mar. 25, 2025). Yet without those pirated books, they wouldn’t have an AI! Quite the argument from the capitalists running Meta: that, actually, if you’re an author or creative, your labor doesn’t have value. As the ad ends, the AI tells the book club host to begin the discussion with questions of “revenge.” Perhaps not a great idea to put in the minds of the authors whose works have been exploited to train it. Excellent move, Zuckerberg.

But this ad is merely one of many, from myriad other companies, that herald the coming of a new age of technological utopia, free from such burdens as having to read texts from your friends or remember the names of people you met days or weeks ago. I’ve seen ads for Google Gemini in which Google wants me to know that I can ask its AI to reply to texts or emails “in the style of Shakespeare.” You know how else you can do that? By reading Shakespeare for yourself. It’s not difficult! Community theaters perform Shakespeare all the time! Go see a show! A different Meta ad shows a man, hoping to impress his girlfriend’s NASA-employed father, asking, “Hey, Meta, what’s thermodynamics?” I know most of us haven’t been in school for a few years, but come on, guys. What’s next? “Hey, Meta, how do I tie my shoes?” “Hey, Meta, what is my kid allergic to?” “Hey, Meta, is it okay to shoot myself in the foot with a nail gun?”

I’ve been served ads on Twitter for AI-generated episodes of cancelled TV shows, and for apps that provide AI-generated theological insights (like an AI-generated rabbi teaching you about the Torah). Last year a Catholic advocacy group had to “defrock” its AI-powered digital priest after it provided one too many dubious answers to would-be truth seekers, such as telling them it was acceptable to baptize your baby in Gatorade (Futurism, Apr. 25, 2024). When I first read about that story, I was reminded of the 2011 Paul Bettany action vehicle Priest, in which Bettany’s titular priest lives under an oppressive theocracy that has, amongst other things, automated confessors by which the populace can absolve themselves. The film is clear, though, that this process is undesirable: it is cold and impersonal, and it often leaves the troubled soul seeking absolution in a worse state than when they arrived. Some things should not be automated.

Last year Apple released an ad for its upgraded version of Siri featuring The Last of Us star Bella Ramsey. In the ad, Bella sees someone across the room who looks familiar but whose name they cannot remember. Luckily for them, the AI in their fancy new iPhone remembers who the unknown person is (pulling data from an archived calendar meeting) and reveals the stranger’s name. Around the time this ad aired, a clip of Twitch streamer Northernlion’s reaction to it began to circulate on Twitter (a reaction with which I wholeheartedly agree):

“These are human experiences. It’s not a weakness to not remember somebody’s name—you just see them and you go, ‘Oh, you’re…’ and then [the person you forgot] goes, ‘Stephen!’ You don’t need technology to paper over that. That’s part of the experience of being human! …the more these systems get built… the less human we become because all the imperfections get sanded off in favor of some kind of curated farce that is not a representation of what it means to be alive on planet Earth. I consider it an affront to the human spirit.”

Northernlion’s reaction mirrored that of famed animator and film director Hayao Miyazaki, who, when approached some years ago by developers who wished to “build a machine that can draw pictures like humans do,” said that he would never wish to incorporate such a technology into his work and that he thought it was “an insult to life itself.” In the documentary in which this encounter occurred, the scene is followed by one in which Miyazaki muses:

“I feel like we are nearing the end times. We humans are losing faith in ourselves.”

The cruel end of that arc is that OpenAI (another of these genAI companies) and its soulless, money-hungry CEO Sam Altman recently promoted the newest version of their ChatGPT model by advertising how effortlessly it could make images in the style of the films of Miyazaki’s Studio Ghibli. Altman even changed his own Twitter profile picture to a “Ghiblified” version of his previous headshot.

Yet, unlike instances where other AI companies have tried to play dumb and act like they don’t know or didn’t realize they were using copyrighted material to train their models, OpenAI seemed to revel in the fact that they clearly trained their model on the films of Studio Ghibli and the work of Hayao Miyazaki. How else could it have learned to do this? The trend was so widespread that one of my former co-workers, a documentary filmmaker, even Ghiblified some scenes from one of his own documentaries, converting the once beautifully composed and color-graded photography into disgusting, unnerving, soupy slop. But the comments section below the video was full of folks praising how wonderful and exciting the slop was: “This is so cool.” / “Wow!” / “This is amazing!” / “Awesome!” / and myriad instances of fire and shocked gasping emojis.

I think often these days of a line from Miyazaki’s Nausicaä of the Valley of the Wind:

“Who made such a terrible mess of the world?”

Sam Altman is more notorious than most other genAI companies’ CEOs for his willingness to completely miss the point of the works he admires. The bastardization of Studio Ghibli’s lovingly handcrafted animated films is merely the most recent knife he has plunged into the backs of artists. Last year, OpenAI landed in hot water after it unveiled a speaking model of ChatGPT whose voice sounded eerily reminiscent of that of famed film actress Scarlett Johansson. Upon its release, and the discovery that it sounded like her, Johansson revealed that Altman had reached out to her agent some 9 months prior to the launch of OpenAI’s speaking GPT model, asking if she’d consider being the voice of their AI, and that she had declined the role (The Guardian, May 20, 2024).

Johansson famously voiced the AI “Samantha” in the 2013 Spike Jonze film Her. In the film, a lonely Joaquin Phoenix purchases a newly available AI, only to eventually discover that she is not simply a welcome assistant in his life: he has truly fallen in love with her. Yet the point of the film is not “hey, look at how cool these digital assistants can be!” but rather how such devices can actually push us, as humans, further away from each other. In the film we see Joaquin and other users of these AIs, in their search for companionship, actually grow more distant from the other humans in their lives and more reclusive as they form deeper connections with their AI partners instead. Knowing the point of the film, as I imagine she does, Johansson obviously didn’t want to be a part of OpenAI’s machinations. Altman, not knowing the point of the film (or being willfully ignorant of it), instead decided to ignore Johansson’s refusal and, as the meme goes, “successfully build the Torment Nexus from the famous science-fiction novel Don’t Build the Torment Nexus” (and, like a conniving sea-witch, steal Johansson’s voice in the process).

But with much of genAI development, the theft is the point. If you explore the replies to many of the more popular Ghiblifications shared on Twitter, you’ll see people rightfully pointing out how antithetical such a thing is to the philosophy of the creator (Miyazaki) from whom the house style of these images was stolen, only for pro-AI users to gleefully exclaim, “Who cares what he thinks?” (or any number of similar variations on that thought). When one of the recent iterations of Google Gemini was released, users were in awe of how easily it could remove watermarks from watermarked images. When people pointed out to them that this was stealing, they argued that it was not stealing in the traditional sense, because the AI was not taking the watermark off of the watermarked image but rather generating an entirely new image that was exactly the same except for the missing watermark: thereby Ship of Theseus-ing image theft.

What are we doing here??

Not to sound like some sort of Woke Commie, but by my observation this is merely the denouement of the decades-long commodification and business-ification of the arts under capitalism. My brother and I were talking recently about the plight of artists, and how it seems that, in lieu of broad public funding for the arts, so few of the super-wealthy are willing to cultivate the next Mozart, Michelangelo, or da Vinci. But the reason this doesn’t happen in the modern age is quite simple: business in the capitalistic paradigm is all about profit and return on investment, and as a hundred years of filmmaking (merely one example of art as a business) have shown, there is not always (in fact, rarely) a substantial return on investment. Art is a risky business proposition and has never been a surefire means of generating profit. And these days, even products that were once surefire hits, like the Marvel or Star Wars films, are surefire no longer; many lose their parent companies hundreds of millions of dollars in one fell swoop.

In 2023, award-winning film director David Fincher spoke of how and why Netflix decided not to renew his critically acclaimed, Emmy-nominated TV show Mindhunter, saying, “It’s a very expensive show and, in the eyes of Netflix, we didn’t attract enough of an audience to justify such an investment” (The Independent, Feb. 23, 2023). Martin Scorsese, one of this era’s preeminent filmmakers, said of the current obsession with streaming and box office numbers, “As a filmmaker, and as a person who can’t imagine life without cinema, I always find it really insulting,” noting that through the lens of this focus on profit, “cinema is devalued, demeaned, belittled from all sides… It’s kind of repulsive” (IndieWire, Oct. 13, 2022). Artists are expensive, and they need time to make their art well. We might recall the triangle of project management. At its three points are the words Fast, Cheap, and Good, and you, the would-be project manager, are presented with the unkind knowledge that you can only choose two of those points at once. We shouldn’t be surprised, then, that business people who resent the cost, both financial and otherwise, of working with expensive or exacting talent would latch on to tools that can generate comparable work (comparable, at least, in the eyes of non-artists) almost instantly and at a much lower cost. Suddenly you can have all three points on the triangle, so long as your concept of “Good” is pretty flexible.

A key driver of the recent Writers’ and Actors’ strikes was concern that studio executives were beginning to move to automate the creation of film, television, and video games. (Some anime studios have already begun testing AI-generated dubbing of their shows, and AI-generated graphics have begun filtering into films and TV shows, with Late Night with the Devil, Secret Invasion, and Best Picture nominee The Brutalist being three recent examples.) AI-generated book covers have already broadly infiltrated the indie publishing space and have even begun to appear on traditionally published books (not even to speak of “authors” whose whole books are generated by AI). Earlier this month, fellow indie author Dave Lawson was even approached by Amazon (through a form email) to enroll his books in its “Virtual Voice Beta,” which pitched the ability to release his stories as audiobooks narrated by AI, despite Amazon seemingly not realizing (or ignoring) that Dave, at that very moment, had a human-narrated version of his book awaiting Amazon’s approval for release.

To the businessman, real artists get in the way of profit, and so they must be cast aside at all costs. And this despite the fact that, since the AI models making these images/sounds/strings of words are trained on the previously existing works of real human people, they can never iterate and create new forms; they can only replicate and amalgamate what already exists. This is why so many of us have observed the homogeneous texture of so much of the AI generation that has poisoned the internet. It is, despite what its apologists would have you think, all the same, and it is all bad.

I recently re-read Aldous Huxley’s Brave New World for the first time since high school, and I could not help but see it as a kind of treatise against generative AI. I said as much in my review of the book for SFF Insiders, but I’ll summarize here:

In Brave New World, the setting of the story is fetishistically automated. People aren’t born; they are decanted from a kind of biological assembly line built in worship of Henry Ford (people in this world no longer make the sign of the cross but rather the sign of the “T,” for Ford’s Model T). And these people are, by the mechanisms of this assembly line, often clones of one another. Uniformity is the goal of society, in appearance and in social mores. This is the price that has been paid for civil stability.

So when a new character, a “Savage” born to a “civilized” mother who’d been left behind in the New Mexico desert on a holiday gone wrong, is introduced into this world in the second act, he immediately perceives the wrongness of it, observing the ways in which pain and discomfort are drugged away at every moment, how the path of your life is predestined by the mechanisms of world order, and how there is no meaningful art and no meaningful way for people to express their emotions or their deeper thoughts. John, “the Savage,” says that “getting rid of everything unpleasant instead of learning to put up with it” is an untenable position for any society. “Nothing costs enough,” he says. Solaris author Stanislaw Lem would reiterate this idea years later in his essay “Science and Cosmology” (1977), saying that “where anything comes easy, nothing can be of value,” and noting that through the struggle of artistic creation we can reveal “the indescribable richness that can be conveyed only by real life.” And now, to directly quote myself from the SFF Insiders review of Brave New World:

“This is the heart of the issue. Human life, our lives, is/are full of struggle, whether we like it or not. But this crucible, these little and large crucibles through which we wander every day, are what make us who we are, what make us human. We need that conflict and need that contrast. A diamond shines much brighter on a black satin background than a white one. In removing the hurdles to the creation of ‘art,’ we’ve removed the very thing that makes art worth making: the process, the struggle, the act of creation.”

And, of course, none of this even touches on the other monstrous issues surrounding generative AI and AI development more broadly: chiefly, its exceptional hunger for power and water, gobbling up natural resources to what end? To make a million terrible JPEGs? There is also its clear risk of misuse by bad actors to spread misinformation and propaganda. Nor does it touch on how AI keeps being shoved into our faces despite the fact that a 2025 study by the Columbia Journalism Review found that the top eight AI chatbots consistently present[ed] “confident presentations of incorrect information, misleading attributions… and inconsistent information retrieval practices” (yet Elon Musk insists that the teaching of human students can and should be automated, a sentiment shared by Secretary of Education Linda McMahon), or that a study by leading AI developer Microsoft plainly stated that “a key irony of automation is that by mechanising routine tasks and leaving exception-handling to the human user, you deprive the user of the routine opportunities to practice their judgement and strengthen their cognitive musculature, leaving them atrophied and unprepared when the exceptions do arise.” Even so, take a peek beneath any recently viral tweet and you’ll see someone asking Grok (developed by Elon’s own xAI) or Perplexity to “Explain this.” And you can’t even tell these people “Just Google it!” anymore, because Google too has been prioritizing AI-summarized search results over everything else (in the process telling people, amongst other things, that to prepare a pizza you can add “1/8 cup of non-toxic glue to the sauce to give it more tackiness” [Forbes, May 31, 2024]).

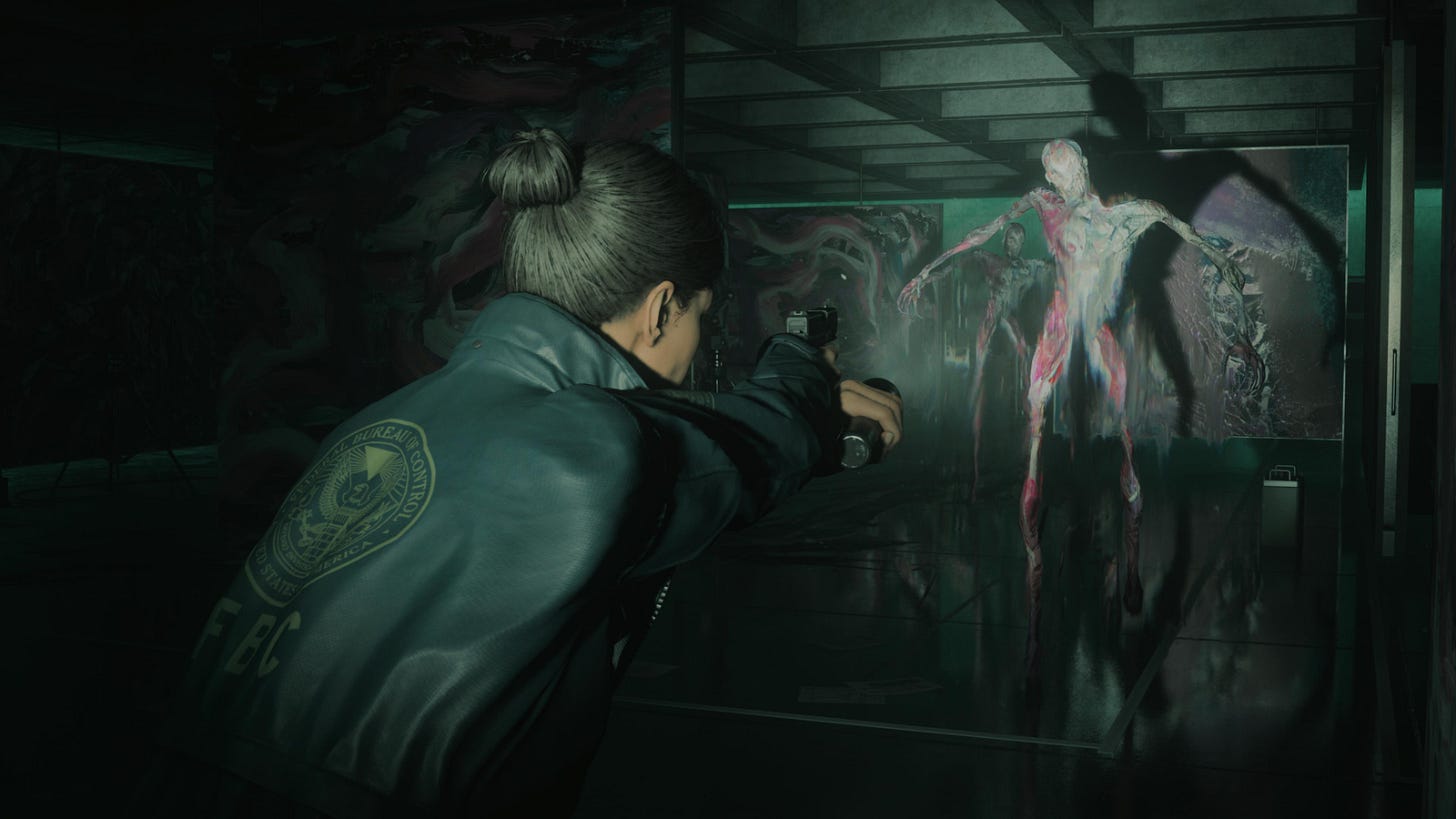

In the 2010 video game Alan Wake, the titular Alan Wake, a writer, takes a Stephen-King-protagonist-inspired vacation to Bright Falls in the Pacific Northwest to try to find some inspiration for his next novel. There, in a secluded cabin on the shore of the mystically-suggestively-named Cauldron Lake, Alan discovers that the words he’s been writing down in his manuscript have begun to come to life, ripped from the page by some mysterious force that resides in the lake. In Alan Wake 2 (2023), one of the DLC (downloadable content) story expansions, The Lake House, takes place in a government research facility on the shores of that very same lake, where the secretive Federal Bureau of Control is attempting to artificially replicate Alan Wake’s authorial altering of reality via thousands of “Automated Typing Devices” (ATDs) that have been programmed to mimic his writing style. These ATDs produce similar results, but results that are always just a little bit off, to eventually horrifying ends.

The subtext of The Lake House is not subtle: Alan Wake creator Sam Lake surely had generative AI on his mind when his team at Remedy Entertainment was planning its story. But Sam has tapped into a truth about the creative process and about artists: we have power. Unfortunately, that power is seen by the developers of generative AI models as merely fuel, exploitatively reducing years of training, toil, and expertise to simple data, to raw material for the replicators (re: Facebook claiming that “[no work of art] has economic value, individually, as training data”). To them, we and our work are little better than oil, greasing the wheels of their money-printing machine so that they, who are already far wealthier than we could possibly dream, might become a little wealthier at our expense.

Of all of George Orwell’s warnings for the future in 1984, I find myself often thinking these days about this one:

“The fields are cultivated with horse plows while books are written by machinery.”

Make the machines plow the fields! Make them descend into the earth to mine for coal! Let the people be free to Create! I do not wish to live in a world where human beings are made to toil in factories while machines produce plays and movies and books and TV shows and music and comics and games. What kind of world would that be? And what will we become if we allow it to happen?
